Create EKS Cluster

Amazon EKS clusters can be deployed in several ways:

Deploy through the AWS console

Deploy with an IaC (Infrastructure as Code) tool such as AWS CloudFormation or AWS CDK

Deploy with eksctl

Deploy with Terraform, Pulumi, Rancher, etc.

In this lab, we will create an EKS cluster using eksctl.

Create EKS Cluster with eksctl

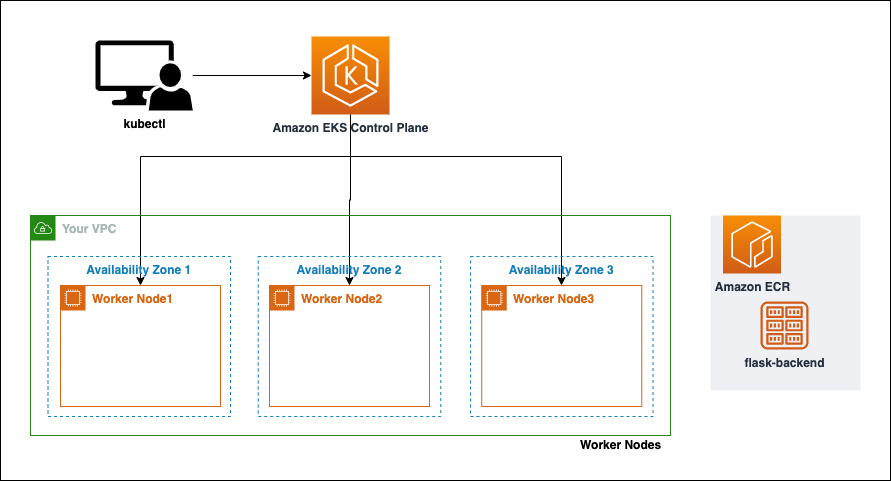

The diagram shows the architecture configured in this chapter: a Kubernetes cluster created with eksctl.

Creating EKS Cluster with eksctl

If you run eksctl create cluster without any setting values, the cluster is deployed with default parameters.

However, we will create a configuration file to customize some values and deploy the cluster from it. In later labs, you will likewise create Kubernetes objects from configuration files rather than only through kubectl CLI commands. This makes it easy to identify and manage the desired state of the objects you define.

Run the commands below in the root folder (/home/ec2-user/environment) to create the configuration file.

```shell
cd ~/environment

cat << EOF > eks-demo-cluster.yaml
---
apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig

metadata:
  name: eks-demo # EKS Cluster name
  region: ${AWS_REGION} # Region Code to place EKS Cluster
  version: "1.23"

vpc:
  cidr: "10.0.0.0/16" # CIDR of VPC for use in EKS Cluster
  nat:
    gateway: HighlyAvailable

managedNodeGroups:
  - name: node-group # Name of node group in EKS Cluster
    instanceType: m5.large # Instance type for node group
    desiredCapacity: 3 # The number of worker nodes in EKS Cluster
    volumeSize: 10 # EBS Volume for worker node (unit: GiB)
    privateNetworking: true
    ssh:
      enableSsm: true
    iam:
      withAddonPolicies:
        imageBuilder: true # Add permission for Amazon ECR
        albIngress: true # Add permission for ALB Ingress
        cloudWatch: true # Add permission for CloudWatch
        autoScaler: true # Add permission for Auto Scaling
        ebs: true # Add permission for EBS CSI driver

cloudWatch:
  clusterLogging:
    enableTypes: ["*"]
EOF
```

In the cluster configuration file, you can attach IAM policies through iam.attachPolicyARNs and enable add-on policies through iam.withAddonPolicies. After the EKS cluster is deployed, you can check the IAM role of the worker node instances in the EC2 console to see the attached policies.
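The heredoc that writes eks-demo-cluster.yaml uses an unquoted EOF delimiter, so the shell substitutes ${AWS_REGION} when the file is created. If the variable is unset, the region value is silently written as empty. The following is a minimal sketch of a check you could run before deploying; the helper name config_region is hypothetical and not part of eksctl.

```shell
# Hypothetical helper: print the value of the first "region:" key in a
# config file, ignoring any trailing "# comment". Prints an empty line if
# the key has no value (for example, when AWS_REGION was unset).
config_region() {
  sed -n 's/^[[:space:]]*region:[[:space:]]*\([^#[:space:]]*\).*/\1/p' "$1" | head -n 1
}

# Usage (run after the file has been generated):
#   region=$(config_region eks-demo-cluster.yaml)
#   if [ -z "${region}" ]; then
#     echo "region is empty: export AWS_REGION and regenerate the file" >&2
#   fi
```

This only inspects the generated text; it does not validate that the region name is one that AWS accepts.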

See the eksctl documentation for the various property values that you can set in the configuration file.

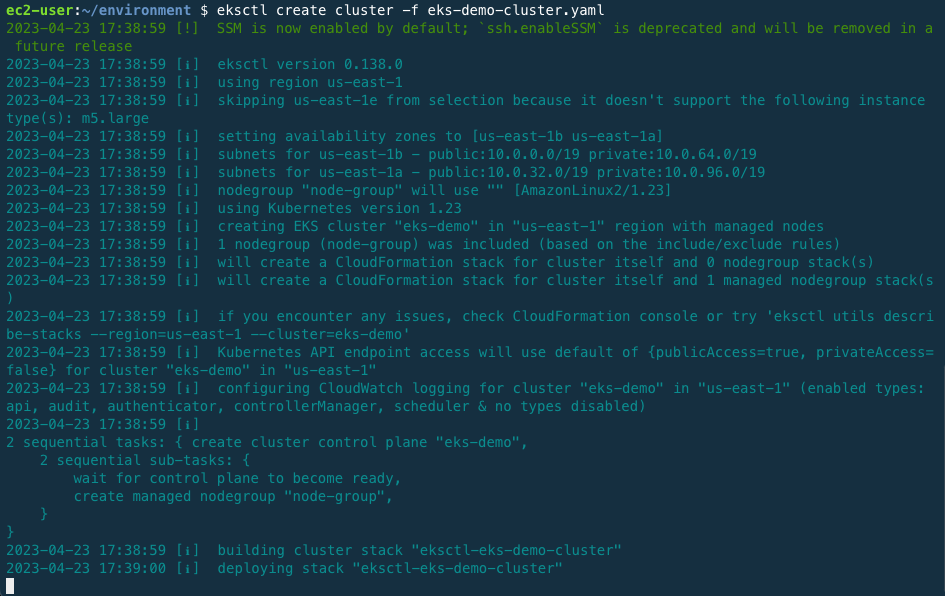

Deploy the cluster with the command below.

```shell
eksctl create cluster -f eks-demo-cluster.yaml
```

The cluster takes approximately 15 to 20 minutes to deploy fully. You can follow the deployment progress in the AWS Cloud9 terminal, and you can also see the status of events and resources in the AWS CloudFormation console.
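The CloudFormation status can also be read from the terminal. The sketch below assumes that eksctl names the cluster stack eksctl-<cluster name>-cluster; confirm the actual stack name in the CloudFormation console before relying on it. The function names are hypothetical.

```shell
# Assumption: eksctl creates a stack named "eksctl-<cluster-name>-cluster".
cluster_stack_name() {
  echo "eksctl-$1-cluster"
}

# Print the current CloudFormation status of the cluster stack,
# for example CREATE_IN_PROGRESS or CREATE_COMPLETE.
cluster_stack_status() {
  aws cloudformation describe-stacks \
    --stack-name "$(cluster_stack_name "$1")" \
    --query 'Stacks[0].StackStatus' \
    --output text
}

# Usage (requires AWS credentials):
#   cluster_stack_status eks-demo
```

The managed node group is deployed through a separate stack, so a completed cluster stack does not by itself mean that the worker nodes are ready.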

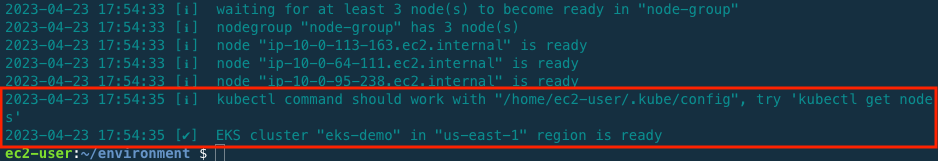

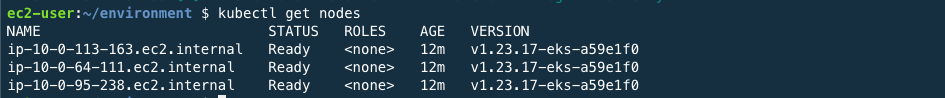

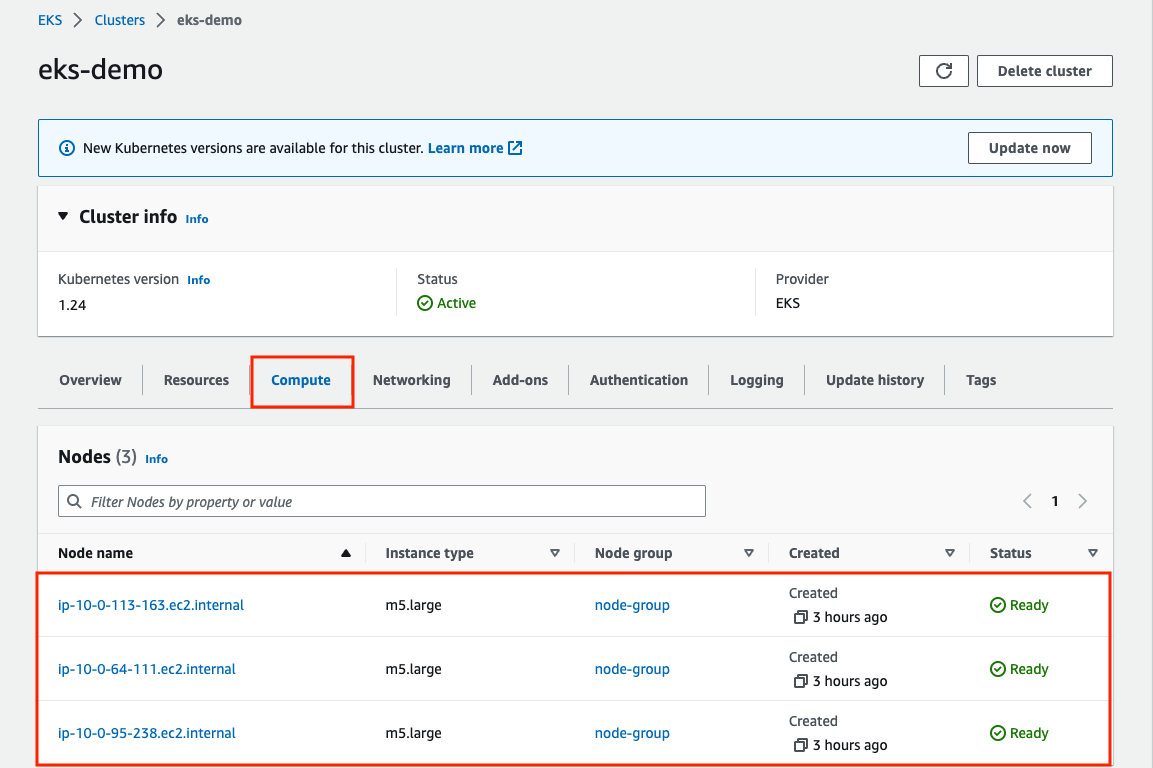

When the deployment is complete, use the command below to check that the nodes are properly deployed.

```shell
kubectl get nodes
```
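With desiredCapacity set to 3 in the configuration file, three nodes should eventually report a Ready status. As a rough sketch, the hypothetical helper below counts Ready nodes from the output of kubectl get nodes --no-headers.

```shell
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready".
# Reads the output of `kubectl get nodes --no-headers` on stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}

# Usage (requires access to the cluster):
#   kubectl get nodes --no-headers | count_ready_nodes
```

Nodes with a combined status such as Ready,SchedulingDisabled are not counted by this exact match.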

Also, you can see the cluster credentials added in ~/.kube/config.

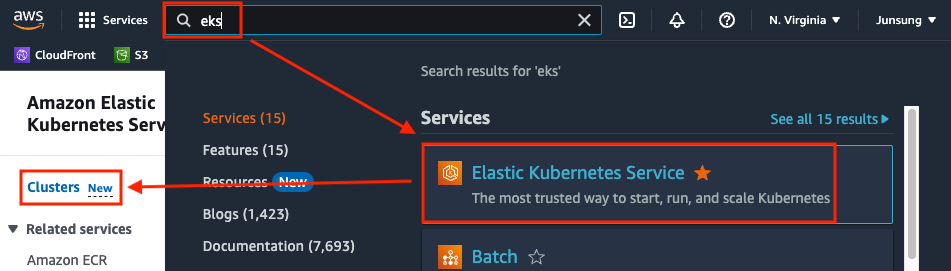

(Optional) Add Console Credential

Attach Console Credential

The EKS cluster uses IAM entities (users or roles) for cluster access control. The mapping rules are stored in a ConfigMap named aws-auth. By default, the IAM entity used to create the cluster is automatically granted the system:masters privilege in the cluster RBAC configuration in the control plane.

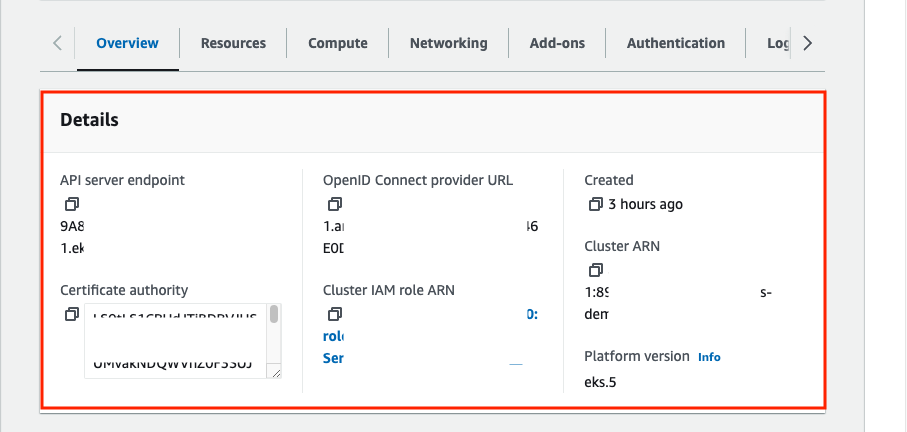

If you open the Amazon EKS console in the current state, you cannot see any cluster information, as shown below.

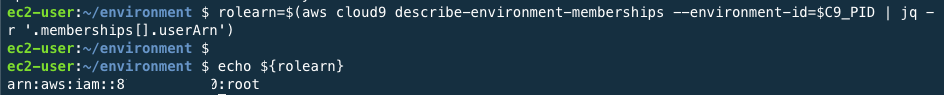

You created the cluster with the IAM credentials on Cloud9 in the Create EKS Cluster with eksctl chapter, so you need to determine the correct credential (such as your IAM role, not the Cloud9 credentials) to add for Amazon EKS console access.

Use the commands below to store the role ARN (Amazon Resource Name) in a variable and print it.

```shell
rolearn=$(aws cloud9 describe-environment-memberships --environment-id=$C9_PID | jq -r '.memberships[].userArn')

echo ${rolearn}
```
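The ARN printed here may be an STS assumed-role ARN rather than an IAM role ARN. The sketch below illustrates how the awk expression used in the next step extracts the role name from such an ARN. The function name and the sample ARNs are hypothetical.

```shell
# Hypothetical helper: print the IAM role name embedded in an STS
# assumed-role ARN, or nothing if the ARN has a different shape.
assumed_role_name() {
  case "$1" in
    arn:aws:sts::*:assumed-role/*/*)
      # Split on "/": <prefix>/<role-name>/<session-name>.
      # The role name is the second-to-last field.
      echo "$1" | awk -F/ '{ print $(NF-1) }'
      ;;
  esac
}

# Example with a made-up ARN:
assumed_role_name "arn:aws:sts::123456789012:assumed-role/ExampleRole/example-session"
# prints "ExampleRole"
```

For an IAM user ARN the function prints nothing, which signals that the extra lookup is not needed.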

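If the ARN printed above contains assumed-role, also run the commands below to resolve it to the ARN of the underlying IAM role.

```shell
# Extract the role name from the assumed-role ARN, then look up the role ARN.
assumedrolename=$(echo ${rolearn} | awk -F/ '{print $(NF-1)}')
rolearn=$(aws iam get-role --role-name ${assumedrolename} --query Role.Arn --output text)
```

Create an identity mapping.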

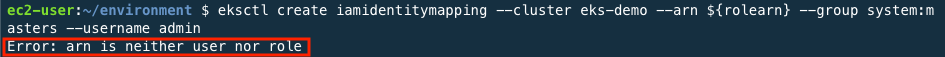

```shell
eksctl create iamidentitymapping --cluster eks-demo --arn ${rolearn} --group system:masters --username admin
```

If you see an error message like the one below after running the command, one way to fix it is to edit the aws-auth ConfigMap directly, as follows.

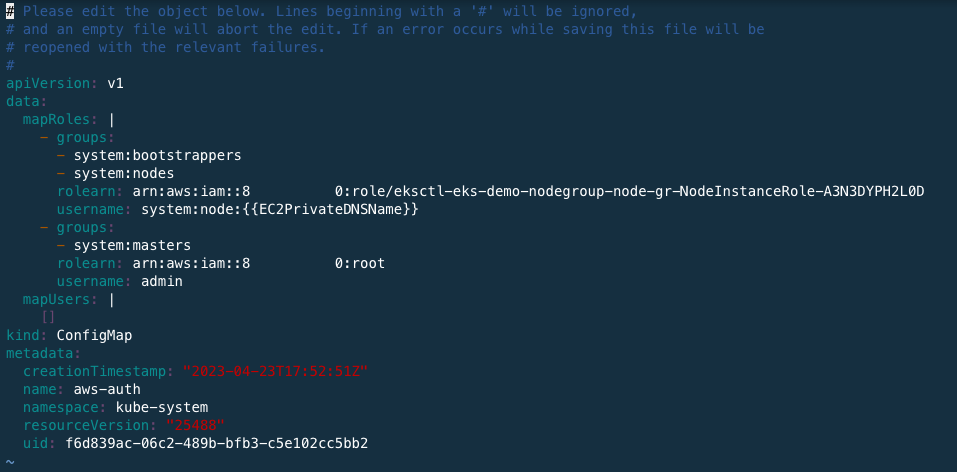

```shell
kubectl edit configmap aws-auth -n kube-system
```

Add the entry below, replacing [Account ID] with your AWS account ID.

```yaml
- groups:
  - system:masters
  rolearn: arn:aws:iam::[Account ID]:root
  username: admin
```
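To fill in the [Account ID] placeholder, you can look up the account ID of your current credentials. The following is a minimal sketch; account_root_arn is a hypothetical helper that validates the 12-digit ID and builds the ARN string in the form used by the entry above.

```shell
# Hypothetical helper: build the account root ARN from a 12-digit account ID.
account_root_arn() {
  case "$1" in
    "" | *[!0-9]*)
      echo "account ID must contain digits only" >&2
      return 1
      ;;
  esac
  if [ "${#1}" -ne 12 ]; then
    echo "account ID must be 12 digits long" >&2
    return 1
  fi
  echo "arn:aws:iam::$1:root"
}

# Usage (requires AWS credentials):
#   account_id=$(aws sts get-caller-identity --query Account --output text)
#   account_root_arn "${account_id}"
```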

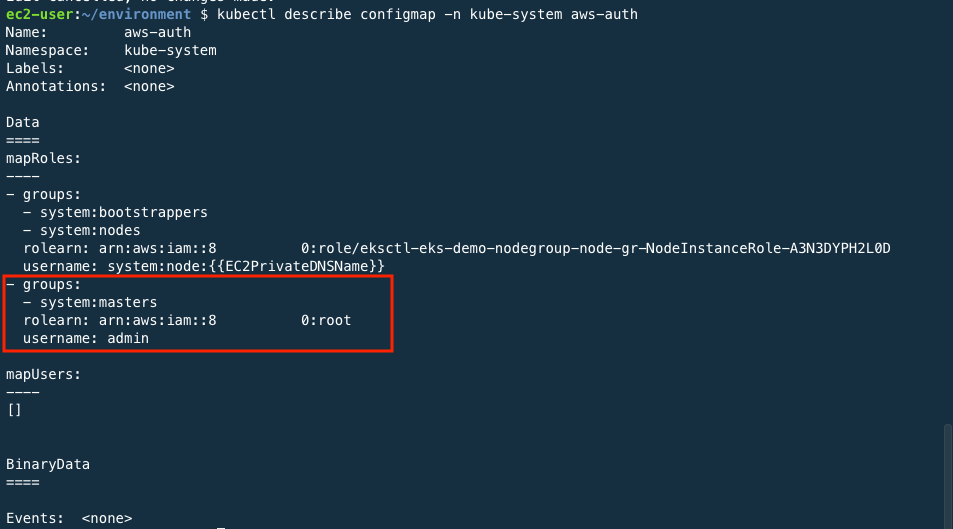

You can check the aws-auth ConfigMap information with the command below.

```shell
kubectl describe configmap -n kube-system aws-auth
```
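Instead of reading the output by eye, you could search it for the ARN you mapped. The sketch below uses a hypothetical helper that reads the ConfigMap text on stdin and succeeds if the given ARN appears in it.

```shell
# Hypothetical helper: exit 0 if the text on stdin contains the given ARN
# as a fixed string, otherwise exit non-zero.
has_identity_mapping() {
  grep -F -q -- "$1"
}

# Usage (requires access to the cluster):
#   kubectl describe configmap -n kube-system aws-auth \
#     | has_identity_mapping "${rolearn}" && echo "mapping found"
```

This is a plain substring match, so an ARN that is a prefix of another mapped ARN also matches.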

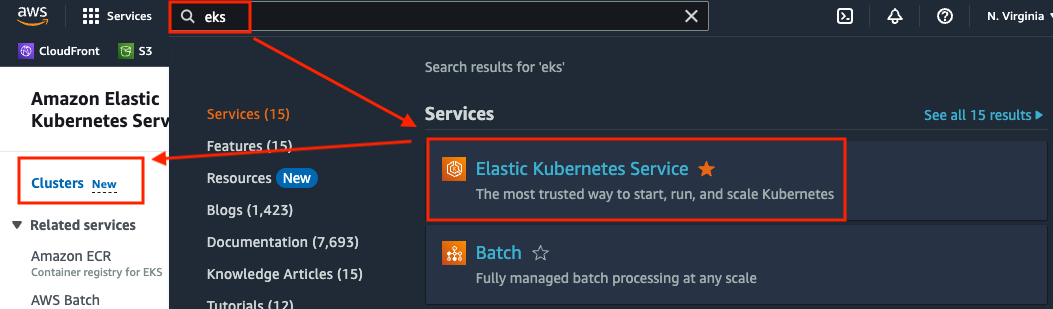

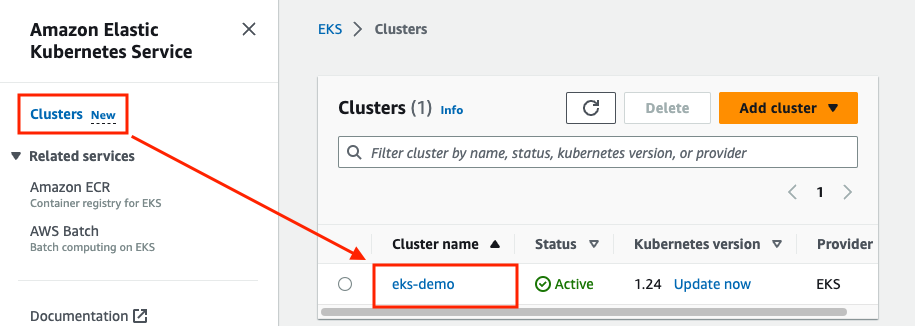

When the above steps are complete, you can view information about the control plane, the worker nodes, logging configuration, and updates in the Amazon EKS console.

On the Configuration tab, you can see the cluster configuration details.